The face is the window into an on-screen character's emotions. When you watch a movie, a character's expressiveness, or lack of it, tells the audience what that character is going through and what they are feeling.

This has always been a problem for video games. From the first games on the Atari, when characters lacked any emotional visual cues, interpretation was the only tool for building a connection. Now that games are moving more and more toward a realistic visual style, emotion is more important than ever. A character on-screen with dead eyes or a puppet-like mouth is no longer acceptable and can destroy immersion for the viewer.

This is where Image Metrics and Faceware come in. Their technology takes video shot during a voice-over (VO) session, or video of an actor performing, and transfers that performance onto a video game character. The outcome is remarkable: characters that emote in believable ways let game studios focus on great story and great gameplay without fear of pulling players out of their suspension of disbelief. While at GDC, C&G Monthly was lucky enough to sit down with Peter Busch, Technical Account Director, to discuss what makes Image Metrics so unique and how its new product, Faceware, can give even independent studios the chance to craft a game that draws people in and keeps them engaged.

Can you tell us a little bit more about Image Metrics?

We have two different businesses, a consumer side and a professional side. Faceware is on the professional side. Faceware has been used internally as a service for the last 8 years, and now it is a standalone product that we can give to game development studios, film studios, television, and essentially anyone who is making character animation. It is driven by a performance.

We take a piece of performance capture: we shoot video of an actor or actress, whether it is on a motion capture stage, in a voice-over booth, or out in the open. All the software needs is a single video feed of a face, so we capture the video completely marker-less. The technology is built on facial recognition. We flag features on each frame of video and identify which pixels are eye pixels and which are mouth pixels, along with the jaw line, the bridge of the nose and the brow. If we know where those pixels are in every frame of video, we know where the rest of the face is, so we can derive head movement, head pose, and natural lip sync from this information.
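To make the last step more concrete, here is a minimal sketch, not Image Metrics' code, of how head pose and a lip-sync signal could be derived once a tracker has already located a few facial landmarks in each frame. The `FrameLandmarks` fields and all function names are hypothetical; the sketch assumes 2D pixel coordinates with y growing downward and covers only in-plane head roll, head translation, and a mouth-opening ratio.

```python
import math
from dataclasses import dataclass


@dataclass
class FrameLandmarks:
    """Hypothetical per-frame landmark positions (x, y) in pixels; y grows downward."""
    left_eye: tuple
    right_eye: tuple
    nose_bridge: tuple
    upper_lip: tuple
    lower_lip: tuple


def head_roll_degrees(f: FrameLandmarks) -> float:
    """In-plane head tilt from the eye line; positive when the right eye sits lower in the image."""
    dx = f.right_eye[0] - f.left_eye[0]
    dy = f.right_eye[1] - f.left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def interocular_distance(f: FrameLandmarks) -> float:
    """Distance between the eyes, used to normalize other measurements for face scale."""
    return math.dist(f.left_eye, f.right_eye)


def mouth_open_ratio(f: FrameLandmarks) -> float:
    """Lip gap divided by eye spacing, so the value does not change as the actor moves toward the camera."""
    return math.dist(f.upper_lip, f.lower_lip) / interocular_distance(f)


def head_translation(prev: FrameLandmarks, curr: FrameLandmarks) -> tuple:
    """Frame-to-frame head movement, tracked via the bridge of the nose."""
    return (curr.nose_bridge[0] - prev.nose_bridge[0],
            curr.nose_bridge[1] - prev.nose_bridge[1])
```

Normalizing by the distance between the eyes is one simple design choice that keeps the mouth signal comparable across frames when the actor moves closer to or farther from the camera; a production tracker would also estimate out-of-plane rotation, which this sketch ignores.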

What the animator does is go in and, per character, define the relationship between that character and the actor. It does not need to be a human; it can be a monster, it can be a shampoo bottle, as long as it has a face. Essentially, they teach the technology that when this actor smiles in the video, the rig smiles.
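One generic way to picture this "teaching" step is a pose library: the animator pairs a few measured actor expressions with hand-set rig control values, and every new frame is blended from those pairs. The sketch below is a hypothetical illustration using inverse-distance weighting, a technique chosen here for simplicity; it is not presented as how Faceware works. The feature vectors, control names such as `smile` and `jaw_open`, and the `retarget` function are all assumptions.

```python
import math

# Hypothetical pose library: (actor feature vector, rig control values set by the animator).
# The features could be measurements such as mouth-corner raise and mouth-open ratio.
PoseLibrary = list


def retarget(frame_features: list, library: PoseLibrary, power: float = 2.0) -> dict:
    """Blend the animator's poses by inverse-distance weighting in actor-feature space."""
    weights = []
    for features, rig in library:
        d = math.dist(frame_features, features)
        if d == 0.0:
            return dict(rig)  # exact match: reuse the animator's pose unchanged
        weights.append(1.0 / d ** power)
    total = sum(weights)
    controls: dict = {}
    for w, (_, rig) in zip(weights, library):
        for name, value in rig.items():
            # A control missing from a pose simply contributes nothing (treated as 0).
            controls[name] = controls.get(name, 0.0) + w * value / total
    return controls


# Usage: a neutral pose, a smile, and an open jaw defined once per character.
library = [
    ([0.0, 0.0], {"smile": 0.0, "jaw_open": 0.0}),
    ([1.0, 0.0], {"smile": 1.0, "jaw_open": 0.0}),
    ([0.0, 1.0], {"smile": 0.0, "jaw_open": 1.0}),
]
half_smile = retarget([0.5, 0.0], library)
```

Because every frame is built only from the animator's own poses, this kind of scheme also hints at why a pose-driven workflow produces consistent results across a large team.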

On the consumer side we recently acquired a company called Big Stage. Big Stage creates avatars from a photo. It is very similar to our approach to motion: they can create the avatar, and we can then drive it with the technology in Faceware. That gives us a more cohesive offering, and because of that, and because both use marker-less technology, we can open up our product to the consumer side, not just the prosumer. So, for example, you could have a cell phone camera driving an avatar. That's the type of technology and product we want to start working on for the consumer side. It's pretty exciting where we are going to be going in the next couple of years.

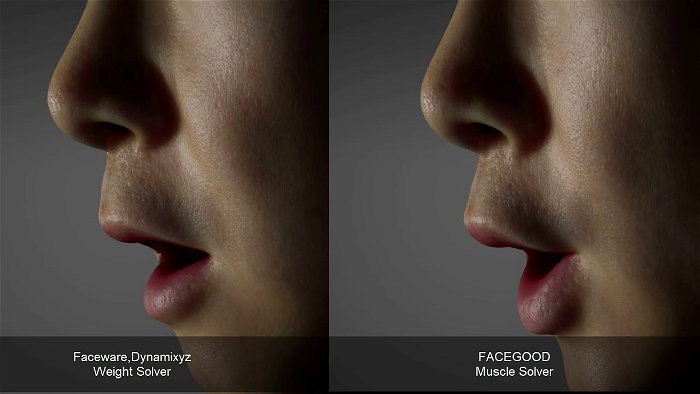

So how does your software compare to other packages on the market today?

The two main drawbacks of optical motion capture markers (small white balls) are that you can't put markers on your eyes, nor can you put markers on your lips, and these are the two biggest conduits of emotion. Lip thickness, lip press, whether your mouth is moving roughly in time with what you are saying: this is where the viewer's reading comes from. This is where your audience connects with your content. They believe the eyes, and you see the soul of the character there. As soon as you see dead eyes, you don't buy it as a character.

The benefit of our technology is that it is image based, so everything is being captured, and the biggest thing is that we are capturing eye lines. Because it is pixel based, the technology can pick up every little subtlety, including the contrast between the whites of your eyes and your iris.
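As a rough illustration of why pixel contrast matters for eye lines, the hypothetical sketch below (not Image Metrics' algorithm) locates the iris in a small grayscale eye patch by finding the darkest vertical band, then turns that into a left-to-right gaze value. It assumes the eye region has already been cropped, that brighter values mean whiter pixels, and that the iris is the darkest feature in the patch; `iris_column` and `gaze_ratio` are made-up names.

```python
def iris_column(eye_patch: list, window: int = 3) -> int:
    """Return the center column of the darkest vertical band in a grayscale eye patch.

    eye_patch is a list of rows of intensities (0 = black, 255 = white). The sclera
    is bright and the iris is dark, so the darkest band of columns marks the iris.
    """
    width = len(eye_patch[0])
    if not 1 <= window <= width:
        raise ValueError("window must be between 1 and the patch width")
    # Total brightness of each column.
    col_sums = [sum(row[c] for row in eye_patch) for c in range(width)]
    # Slide a window across the columns and keep the darkest one.
    best_start = min(range(width - window + 1),
                     key=lambda s: sum(col_sums[s:s + window]))
    return best_start + window // 2


def gaze_ratio(eye_patch: list, window: int = 3) -> float:
    """Iris position from 0.0 (left edge of the patch) to 1.0 (right edge)."""
    width = len(eye_patch[0])
    return iris_column(eye_patch, window) / (width - 1)
```

A real tracker would work in two dimensions and cope with eyelids, lighting and reflections; the point here is only that the white-versus-iris contrast a marker cannot see is directly measurable from pixels.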

What games use Image Metrics technology?

Our company has been around for 10 years, and we have been involved with over forty triple-A titles and some feature films. Recently we have been involved with Halo: Reach, Red Dead Redemption, Assassin's Creed and Army of Two, and we have a few unannounced titles coming out soon.

Since we were service based, only the big studios could afford to outsource the work. What we have done now is offer a lower price point. It is cheaper, but it is the exact same software. So an animation team at an independent developer can use the software that helped produce last year's game of the year.

Do you need to work with any specific engine?

It is a plug-in for Maya and 3D Studio Max, and since those are the tools animators already use, it can be used with essentially any engine. Everyone gets animation into their engine differently, so we backed off from that, but we can export to Unreal. Essentially it is an exporter from Maya or 3D Studio Max. The bigger thing is that we designed a facial rig that can be exported into Unreal as well. For the most part we don't get too involved with the engine side of animation development.

The best part is that our animation lives in Maya or Max, so there is nothing proprietary that people need to develop around. This allows a much smoother integration into the development process.

Why use Faceware?

Faceware does two things very well. One of them is consistency. If you have a lot of volume and a lot of cut scenes, it's driven by poses. You feed it a small number of poses provided by a senior animator, and these poses essentially define all the results, so everything ends up looking like it was produced by one animator, which is always a challenge when working with a team of animators. You don't want to be able to spot quality differences. When you use the same poses throughout the workflow, you get consistent results.

When we worked on The Ballad of Gay Tony, we had to produce 200 minutes in six weeks. That is daunting. We only had a team of 12 animators in Santa Monica. You set up your leads, and you are able to produce much, much faster.

Another thing is that it lets animators get to the fun part of facial animation. One of the things it does really well is timing. No one likes to animate lip sync; it is a pain in the butt. What you want to get to, as an animator, is pushing the performance and drawing out that emotion. So what Faceware does is get the animator to a better starting point. To me it is better than any other method I have seen. The curves are clean. You are not cleaning up motion capture to get a performance; you have a performance, and now you can really try to embellish it. You get to be an animator and have fun with the work again. Too often you see people spread too thin who just want to get the game done rather than bring it to a high level of quality.

Continued…

Pick up a copy of C&G Monthly magazine today OR subscribe online to view our Premium Articles to read more!