The annual Google Keynote has returned today, and kicking off the two-hour I/O 2024 showcase are Google’s advancements to its existing Gemini AI system, centered on Gemini 1.5.

Like last year’s Google I/O Keynote, this one started with Google CEO Sundar Pichai stepping up to the podium to reflect on the current state of Google’s AI technology. He immediately jumped into the Gemini technology announced in 2023 and the advancements the company has made with AI since.

Google Gemini 1.5 Pro – Google Keynote 2024

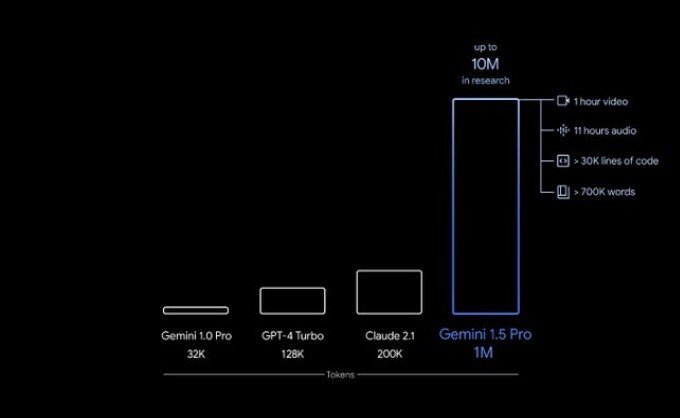

Google jumped straight into the meat of its presentation, showing its Gemini 1.5 Pro model. The model features a context window of 1 million tokens (the amount of input it can take in at once) and is multimodal, meaning it can combine text with other modalities like audio or video, all working to accomplish the user’s needs.

According to the keynote, more than 1.5 million developers are using Gemini models, and all of Google’s products with 2 billion users use Gemini (it’s even available on iOS). People use Gemini to search in a variety of new ways, and Google has been testing this experience to increase user satisfaction. Pichai announced that Google will be launching AI Overviews to improve the user experience. The presentation then ran through a series of specific requests, using Google Photos to showcase the advancements.

Google Photos Using Gemini 1.5 Pro

According to the keynote, more than six billion photos and videos are uploaded to Google Photos every single day, and with Gemini 1.5, Google is making it easier than ever to upload, search and now ask Gemini for a specific photo. The demo combined multimodality with the 1 million-token context window of Gemini 1.5 Pro and showed how simply rephrasing a question can change Gemini’s response.

In the example, Pichai showed how asking Gemini can improve revisiting old memories. Instead of “Show me how Lucia learned how to swim” (which returned one photo), he asked, “Show me how Lucia’s swimming has progressed,” and Gemini 1.5 Pro returned many photos taken over the years, showing Lucia swimming and how she has advanced.

Gemini 1.5 Pro’s multimodality also carries over to Google Meet. Google used the example of a parent missing a PTA meeting: if a person has missed an entire one-hour meeting, they can simply ask Gemini, “What are the main points of this meeting?” and the meeting will be summarized for the absent party in an easy-to-read text format.

Software Development with Gemini 1.5 Pro

The keynote also showed how back-end and front-end software developers have been using Gemini 1.5 Pro to search their code with key search terms. One developer said “it felt like Christmas,” referring to how easy Gemini makes fixing code. Gemini learns who the user is and fills in the blanks from there by learning the user’s habits and behavior.

Google’s VP of Labs, Josh Woodward, then came out to discuss a new feature in Gemini called Audio Overviews. Instead of just a simple summary and study guide, Gemini can now generate a narrated version, using its multimodality to walk the user through a whole scientific scenario. Woodward asked for an example that used basketball to demonstrate force, and Gemini delivered it with full narration. Below is a post from Google’s official X (formerly Twitter) account showcasing the new intelligent systems of Gemini 1.5.

The keynote then revealed that the improved Gemini 1.5 Pro and Gemini Advanced are available globally today in many languages. Fans can catch the rest of the announcements on the Google I/O keynote webpage.