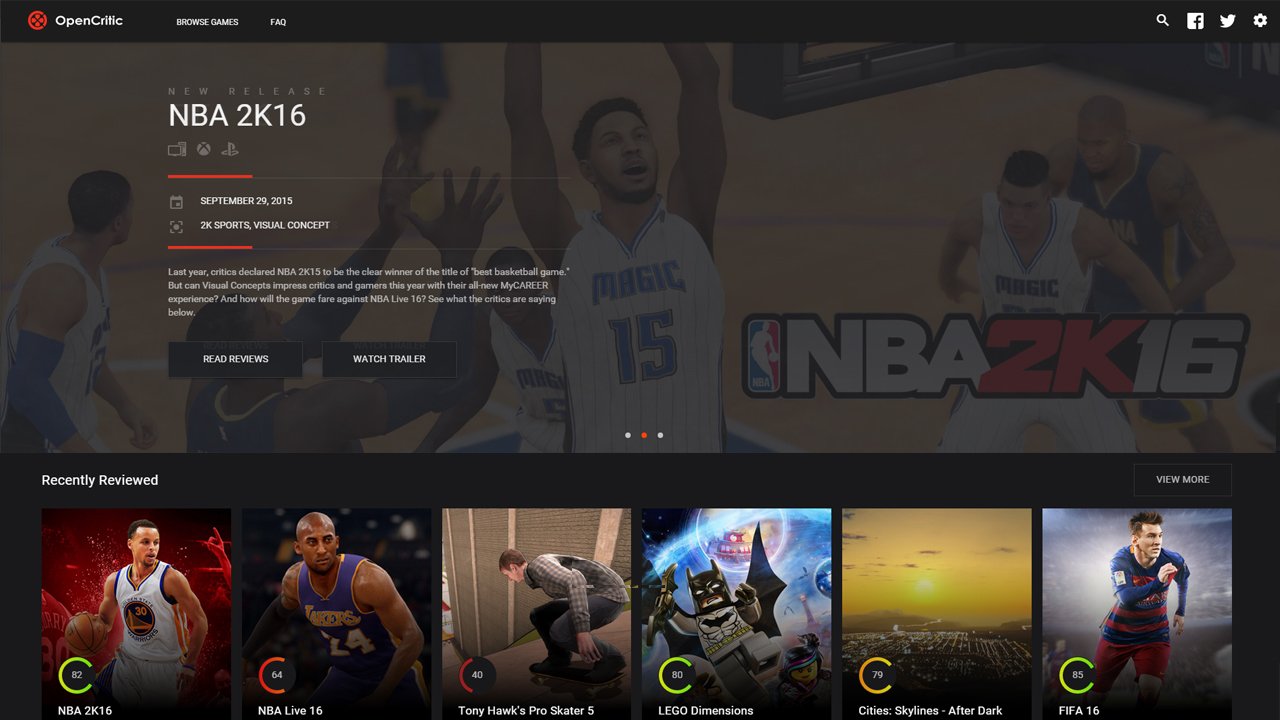

A new review aggregator has emerged from the shadows and looks to change the current model established by Metacritic.

OpenCritic is a site that compiles review data for average scores, not unlike Metacritic; however, OpenCritic looks to put a greater emphasis on the content of the reviews rather than the numbers. Their goal is to focus more on the people behind the reviews, showing the human aspect by connecting critics and gamers.

OpenCritic works like this: an Initializer checks sites for historic, or archived, reviews; a Listener checks every 10-20 minutes to see if a new review has been posted; and a Snippiter extracts the author, publication date, a quote and the score from the review.
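As a rough illustration of that pipeline, here is a minimal sketch of what one Listener pass and the Snippiter's output might look like. None of this is OpenCritic's actual code: `ReviewSnippet`, `poll_once` and `list_review_urls` are hypothetical names, and how an outlet's review URLs are fetched is left as an assumption.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Iterable


@dataclass
class ReviewSnippet:
    """The four fields the Snippiter is described as extracting (hypothetical shape)."""
    author: str
    published: date
    quote: str
    score: str  # kept in the outlet's own format, e.g. "8.0/10"


def poll_once(list_review_urls: Callable[[], Iterable[str]], seen: set[str]) -> list[str]:
    """One Listener pass: return review URLs not seen before and remember them.

    `list_review_urls` is an assumed callable that returns the review URLs
    currently listed by an outlet; fetching and parsing are out of scope here.
    """
    # dict.fromkeys drops duplicates while keeping the outlet's ordering.
    new_urls = [url for url in dict.fromkeys(list_review_urls()) if url not in seen]
    seen.update(new_urls)
    return new_urls
```

In this sketch, a scheduler would call `poll_once` every 10-20 minutes and hand each new URL to the extraction step; the Initializer would be the same idea run once over an outlet's archive.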

OpenCritic is made up of four people: Matthew Enthoven, the Content Manager and Business/Press Relations; Charlie Green, the Server developer; Rich Triggs, the Front-end developer; and Aaron Rutledge, the UX designer. CGM spoke with Matthew to get an insider look at OpenCritic.

CGM: So how and why did you guys get started?

Matthew: So, how we got started actually has nothing to do with videogame reviews. It started with a problem I was having at work. I would read a lot of news in the industry, and I would go to websites like Kotaku or even CGM and I was like, “You know what, I really want a place where I can just get the core gaming news; I don’t want to see cosplayers, I don’t want to see comic-book stuff; I just want the big [gaming] news for the day.” And so we initially started out like, “Hey, we’re going to aggregate news,” and that was what started the project last November. I went to a high-school friend and was like, “Hey, you want to do this?” and he was like, “Yeah!” Then we got to January or February and we were pretty far along, and when we looked at the website then, it was kind of like a Flipboard type thing. We were like, “You know what? When we’re looking at this, all we’re ever reading are the reviews. We don’t pay attention to any of the news stuff because our review content is so compelling and so well done here.”

So when we started to think about the broader industry and how reviews are currently treated and aggregated, we were like, “You know, this could be an opportunity for us to do something really positive, to really try and help humanize critics, to help gamers get a better picture of how a game is scored and reviewed, and give them a better understanding of how this stuff works.” That was a big pivot point for us and we started focusing only on reviews and now we’re here. We really do believe that this is an opportunity to be an inflection point for how the conversation between critics and gamers happens; we want to make sure that every gamer can have a healthy dialogue with someone who’s reviewing the game.

CGM: Were you dissatisfied with the current Metacritic-type sites?

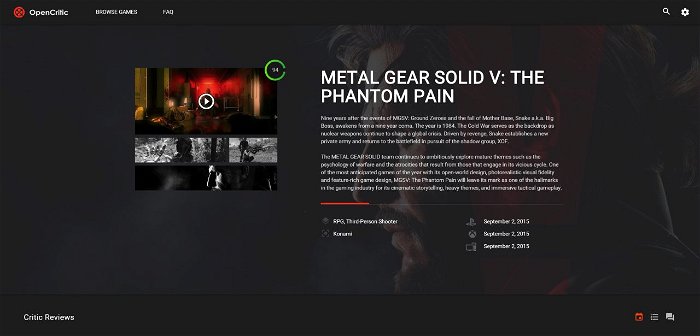

Matthew: Yes. Metacritic is particularly frustrating in its black box nature. I was a Metacritic user for a long time and eventually I stopped because I honestly just didn’t understand why they made certain decisions. Why are certain outlets rated higher? Why am I unable to get a complete picture of a game on one page? And why is there this language barrier? I had to find reviews that were in English, and it didn’t seem to paint a full picture. They were missing publications like Rock, Paper, Shotgun. We wanted to try and get that complete picture: one place you can go and see what all the critics are saying and digest it and actually understand everything that was said about that game.

CGM: Now, I noticed one of your features being “user review” aggregation. Given an example like Steam, some would argue user reviews are less than reliable. Do you think this might hinder the overall “metacritic” scores/reviews?

Matthew: Well, let me start by saying, just to be clear, we currently do not have user reviews on the website. The idea that we have is aggregating user reviews across lots of different places. For user reviews, we think there’s a lot of work to be done there. We completely agree with you that, right now, the way user reviews are presented is not really satisfactory. We’ve observed through our own data collection (because we have started working on user-reviewed games and looking at and understanding how Steam and Amazon work) that most people are pretty polarized. They’ll only give a product five stars or one star. And so, Steam probably observed that as well, which is why they only have a thumbs up/thumbs down. We’ve looked a lot at Rotten Tomatoes; Rotten Tomatoes asks, “Hey, would you recommend this movie to a friend?” and if you say yes or no, their percentages are an aggregate of that. I think whenever we choose to do user reviews, we’re going to have to find a way to make sure we do it right. Then we’ll add the pieces that it needs to be clear what your response is. It needs to be accurate and scientific; it needs to ensure that you actually played the game. I think it would be something we would be interested in doing, like if you want to do a user review you have to link your Steam or PlayStation or Xbox account so we can verify that you played the game. Those are some of the ideas we’re playing with, but I think right now we’re solely focused on critic reviews at our launch.

CGM: Will OpenCritic focus mostly on written content? I know video reviews are becoming more and more popular. Is that something that’s going to be taken into the aggregation?

Matthew: We do have two video critics: Totalbiscuit and Angry Joe, and their videos are embedded directly into their own review card. We’re trying to figure out the right way to set standards on videos in particular. We want to make sure that any critics who are invited onto the website have a following, are trusted by their audience, and their audience is somewhat large. We’re still trying to figure out how we get there. We really don’t draw distinctions between video reviews and written reviews, but we do have this expectation that there is a “quality bar”: you have a following, there’s consistency in how you talk about games, your review content is helping people make a purchase decision. For a long time, we talked about let’s plays and whether we wanted them to be included, and we openly decided not to because we felt like they weren’t helping drive purchase decisions; they were more of an “I just want to see what this game would have been like.” But we may do let’s plays in the future; nothing’s set in stone.

CGM: Now, it seems like OpenCritic will actively be working with journalists, one of your features being publishing review embargo times. Will you be continuing this and posting press releases, or will it strictly be review focused?

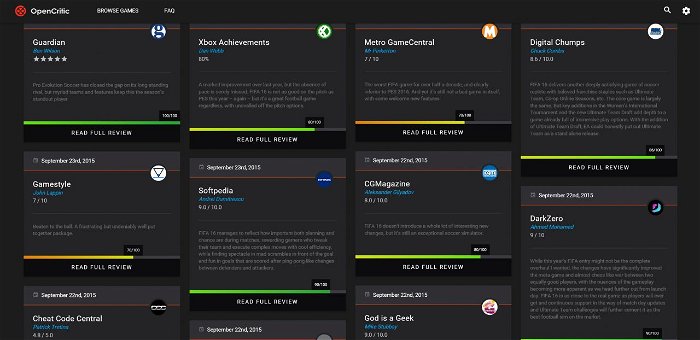

Matthew: We are definitely solely focused on reviews. We do not plan to create any of our own content. We are definitely focused on being an aggregator; we’re here to give people a complete picture. We want critics to be able to control their content. One of the key themes we brought up early in the design of OpenCritic was that we want to preserve “outlet identity” as well as “critic identity.” We might not be all the way there yet in terms of the critic identity, but we felt that it was very important to represent the person and the publication in a genuine way. So, an example is: Metacritic converts all scores to a scale of 100; we do too for the purposes of calculation, but we also display whatever the original score format is. So, CGM uses a scale of zero to 10 including decimal points, so when we show CGM’s score, we’d show 8.0/10. Contrast that with GamesRadar, which uses a star system, showing zero to five stars, and we show the stars. We try to maintain that outlet identity going forward; we want journalists to control their own content. We’re working on making it so journalists will have a login portal so if they publish a review they can come and change the stuff that is shown. We want journalists to feel like they have control over how this stuff is displayed.
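The split Matthew describes, one value for calculation and another for display, can be sketched in a few lines. This is only an illustration under stated assumptions: the `Score` class, its field names and the `"decimal"`/`"stars"` styles are hypothetical, not OpenCritic's data model.

```python
from dataclasses import dataclass


@dataclass
class Score:
    value: float      # score as published by the outlet
    scale_max: float  # top of the outlet's own scale, e.g. 10 or 5
    style: str        # "decimal" or "stars" (hypothetical labels)

    def normalized(self) -> float:
        """Convert to a 0-100 scale, used for calculation only."""
        return self.value * 100 / self.scale_max

    def display(self) -> str:
        """Render the score the way the outlet itself presents it."""
        if self.style == "stars":
            full = int(self.value)
            half = "½" if self.value - full >= 0.5 else ""
            return "★" * full + half
        return f"{self.value:.1f}/{self.scale_max:g}"


decimal_score = Score(8.0, 10, "decimal")  # a zero-to-10 outlet
star_score = Score(4, 5, "stars")          # a five-star outlet

# Same normalized value, different on-page presentation.
print(decimal_score.display(), decimal_score.normalized())
print(star_score.display(), star_score.normalized())
```

Both example scores normalize to the same number, yet each keeps the look of its outlet's own scale, which is the "outlet identity" idea in miniature.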

CGM: So will OpenCritic work in the same way that Metacritic works, where it’ll compile all the numbers and give games that average or “mean” score?

Matthew: Yes, it’s a straight average. We’re not doing any hidden ratings. In fact, if you click any score orb, it’ll show you how that score is calculated in a very scientific way.
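A "straight average" with no hidden ratings means every outlet's normalized score counts equally. A minimal sketch, assuming scores have already been converted to a 0-100 scale; the function names and outlet labels below are made up for illustration:

```python
from statistics import mean


def straight_average(normalized_scores: list[float]) -> float:
    """Unweighted mean: no outlet counts more than any other."""
    if not normalized_scores:
        raise ValueError("no reviews to average")
    return mean(normalized_scores)


def explain(scores_by_outlet: dict[str, float]) -> str:
    """Spell out the calculation, in the spirit of a transparent score breakdown."""
    values = list(scores_by_outlet.values())
    terms = " + ".join(f"{v:g}" for v in values)
    return f"({terms}) / {len(values)} = {straight_average(values):g}"


# Hypothetical outlets and scores, for illustration only.
print(explain({"Outlet A": 80, "Outlet B": 90, "Outlet C": 70}))
```

A weighted scheme would multiply each score by a per-outlet weight before dividing; the straight average simply leaves that step out, so anyone can reproduce the result from the listed reviews.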

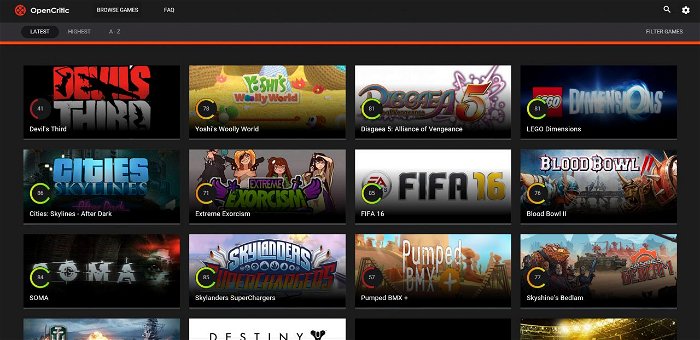

CGM: Do you think it’s possible, then, for a site that essentially “crunches the numbers” to have a personal feel?

Matthew: Yeah, we are kind of worried about that. At our core, we think that review aggregators are not going to go away. If you look across all industries, you know, if I’m going out to dinner tonight, I’m whipping out Yelp and looking for a restaurant that has four stars. If I want to see a movie, I’m going to Rotten Tomatoes and checking out which movie has the best fresh meter. If I’m going to buy something off Amazon, I’m going to look at that aggregate score. What we’ve tried to do is refocus quite a bit. We intentionally tried to make the score orb have very little visual weight. We’ve made the reviews themselves have a lot of visual weight; we have a policy of not embedding score orbs into the masthead. I think there are going to be people that think, “Halo 5 reviews are today, what’s the score on OpenCritic?” and they just come here and check Halo 5 and bounce. I’m not sure if that pattern is going to change. We’re trying to figure out ways to do that; we’re hoping that some of the stuff we’ve already done makes an impact.